04.1 (conspect) Cache

What is it – cache?

- Memory cache

- Display the actual part of the data scheduled in a fast device. Synchronically data. Cache - is a high-speed storage layer at which the required data set is typically of a temporary nature.

- Example of caching

it is exchange data with disk, but nobody remembers about it anymore. Several times ago, it was a critical component of the operating system. The hard drive is a million times slower. Operating systems that are heavily tied to constant disk sharing have suffered from having to do a lot of things all the time. A (real) hard drive works like this: there is a surface (flat pancake) with heads, and to read the data you first have to move the head horizontally on the track and wait for the track to spin to the desired area. And even if it's fast, it's still very slow

compared to RAM operation. It is millions of times faster.

compared to RAM operation. It is millions of times faster.

The solution to this problem is to allocate a piece of RAM to read data from it and write into it while the processor is doing nothing. Data from five is written to disk. When we are done or have written too much, the content (cache) is written to memory.

There are two modes of cache operation:

- write cache

- read cache

Caching data on a floppy disk in the early days was very useful because the disk speed is slower than the RAM speed. And if a piece of RAM is used to exchange with the disk, we open the file and start reading from there. If we change something, we write it into memory - and the operating system will do it later. And there are disadvantages:

- The main drawback is that the more our cache is 'hot' (we should release it when we have changed something but haven't synchronized it to the disk), the more dangerous is connection interruption. When we cache not files but disk operations - part in new, part in old and part in data.

- The second problem is that if someone works with the drive bypassing the cache, everything in the cache is perpendicular to them at that point. We have done/changed something, and that's not relevant anymore.

- The third, all operating system components that worked with the disk now work through the cache.

Speed:

There are two different speed parameters: Speed - there are two different speed parameters - response time and bandwidth. If we say the drive is slow - we say both things - at this point we wait for it to read the data - this is response time. The read operation is not happening. Bandwidth is a metric characteristic that shows the ratio of the limit quantity. Example of Bandwidth: 1 Megabyte per second - even if the drive is physically fast and makes positioning operations fast or reads the entire track at once (like modern drives) - there are different drive spins - very fast 5400, 7200 and even more spins per minute. 7200 - reads everything we need in a second. However, even if the drive is like this, but if it is connected to a car with 1 Megabyte per second - the speed will still be 1 Megabyte per second.

There is another third thing, which in the case of CAOS is a technological problem - the third property of the cache is when it is faster than a slow disk - we can write a large piece of data quickly and read a small one. We can't read only 1 byte from the disk. So, if we read each byte directly from the disk by sectors - each reading of a sector is very slow. We have read a whole Megabyte of kilobytes and we need a byte from there - that's fine. The situation when the External Devices are designed to read in large pieces and then we crush them. Our slow devices, in an effort to get the job done faster, communicate in large pieces.

And why is the fast memory cache smaller than the device we cache on the slow one?

Perhaps we can intuitively assume that fast devices are undoubtedly more expensive in terms of money because of technology or high power consumption. RAM uses less power than hardware memory (processor memory). And the second - in fact, the more memory, the slower it is. We have 5 wires - reference to the register and so we get the address. And now the real OP, which doesn't only store, for example, 64-bit address, but also the memory organization itself doesn't allow you to set 32 wires and the size of cells - hardware circuitry that includes 32 wires turns it all into a circuit and reads from there. The difference is that we can use 5 wires and circuitry around them or we can use 2 in 32 pieces. Especially if our memory requires a search - not addressable, but actual - the search procedure is linear. From here the search time is increased. Far not always the size of the cache is smaller than the size of the device cache.

!!! We rely on the data we need and our next conversation will be to define the concept of relevance.

Two not universal principles of computer architects - our data is arranged in such a way - they are the display of what is topicality:

- * Temporary locale

- if we have referred to some cell(s), it implies that we will refer to it again.

Example for code: loop; Example for data: array.

- * Spatial address locale

- we address some cell in memory and it follows that we address the next cell later.

Example for code: after this instruction the following will be executed; Example for data: any data array, stack.

- Brunch Predictions

- predict which cell we'll do next. Let's cache the beginning of the cycle in memory and not the continuation.

Equidistant references

A very frequent situation: we address a cell with the address n, then (n+16), then (n+32) and so on - this is an example when any array of complex data - an array of structures looks like: read the structure of field 'A' and then read the next structure of field 'A' and then compare it. In memory, we are climbing through the exact step shift. However, until we start addressing memory, nothing follows which step we are walking with. What should we cache? Answer: The data we've already read have been accessed because the principle of temporal localization says that what we've accessed we'll do again. And there's a third of any pattern that's more complex than addressing the next cell - we can predict what we'll do - what step through.

!

The cache isn't rubber and it's gonna run out one day... - what should we throw away? On what principle should we do that?

Algorithm for obsolescence of data

Remember that we must always do a cache search - this is the weakest place!!!! Strategies:

Random: Accidentally throwing something out of there - it works less but not very well. You could accidentally throw away what we need.

LFU (less frequently used): The strategy is ideal when we keep the most frequently used data in the cache and throw away what we use less often. We count how many times we have accessed it - we get the frequency of access and when we re-fill it we throw out the data with the lowest frequency. However, here we add two additional operations - addition and division (not quite) - these calculations at the hardware level. This strategy is capricious - it depends on the nature of the data - is not used often.

LRU (less recently used): we throw out not the cells of frequencies that are the smallest, but those that we have not addressed for a long time. It's easier to lay down - does not require division - only requires a time counter.

FIFO: Phyto Methods of data ordering: queue. We do it hardware. It's as efficient as random obsolescence - but a little better. Why? It's local. Probability that we throw out not necessary much less - if the cache is large then the last cell in this queue is not needed.

FIFO+LRU: combining the queue with the principle of throwing out the oldest cell. When we add a cell to the queue, we look for it in Kesha - if. If there is, we move it to the beginning. No counters - if there is a search - we can simply add a new cell to the queue instead and update its status. With computational complexity - the easiest and most efficient.

- Cache hit(Getting into the cache)

- is a situation where we access memory and data for caching.

- Cache miss

- access to non-cached element.

What's been cached?

1 memory cell is expensive. We should know the counter (for LRU), the address of the cell (30 bits) and say the cell (32 bits) - why 30 bytes? The address is a multiple of 4, and we store an entire machine word - we do not remember the last zeros.

| 00 | 10 04 00 00 | a0 78 4f 95 |

On 32 data - it is 38 age + address - it is a lot. We can mean it by the principle of localization then we can cache not one, but many consecutive cells. So we enter the concept of a cache string - it's some machine word (256) with metadata (age and address). Why the address is 22 bits - because we store the address (a0 78 4f 95 ... 34 45| 8000 ) then the total is 1024 bytes - 10 bits extra . We don't have to store the whole address, we store the smaller part.

- Tag

- is to store only a significant amount of memory that we're going to cache.

In OS, it's a piece of running data. The ratio of data to metadata is human. Modern caching uses strings. Statistically, it's justified. Caching tactics:

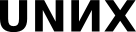

- Direct caching is the easiest in terms of hardware organization. The idea behind this picture is as follows: there are 4 lines and there are areas of RAM nailed down which can be cached. When we address the RAM to address 8, it is cached at 0 line of code and we put it in a tag - what we took from line 8 is a piece of memory. We only store the number of the line from which part to take - we have an example from part 2. on the principle of localization - the cache line, if we went to piece 4, then most likely we will go to piece number 5.

Burning out the cache: is when the cache doesn't match what we want. But on the principle of spatial locale, we won't do that. If our program breaks the spatial localization - the direct cache is not effective. Hardware implementation is very simple - do we take the data, calculate the tag, compare and on this basis decide whether the data is cached or not? Deficiency - if the principle of temporal locality is violated, it works badly.

By the way, any PC breaks the principle of spatial localization as it is any preemptive multitasking - and this is a little less than all the PCs in the world. The OS has a kernel and several tasks are running - and when we switch from code to code, from a system call to another call - this principle is broken.

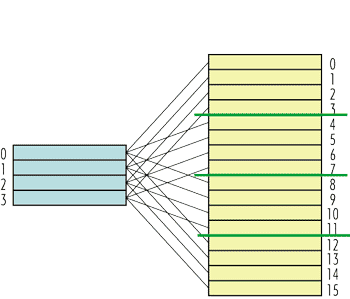

- Associative cache - so that each line can contain any line from memory. We turned to the piece - replaced it with an old piece. But! Unlike the previous one, a comparison operation is added. In direct access, we know exactly which string can lie and compare only tags. In this case we need to check/find where the corresponding tag lies. Cache, which provides the procedure for finding a tag. It's used on small volumes. It is resistant to violation of spatial localization principles. But we need a search.

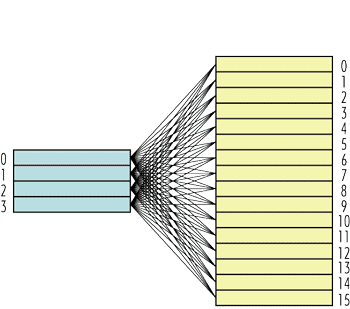

We combine two principles.

- Multi-associative cache - it is fast to store data and supports multiple locales to maintain associativity. Contains several 'banks' - one 'bank' acts as a direct access cache and is searched in associative style - select from 'banks' the one whose line is obsolete, throw it away and write the new one. When we write down the line again we check it by tag and then we get it, plus that we don't do a full search on the cache - only search on 'banks'. How many 'banks' is the complexity of 'banks', how many 'banks' is the size of the cache. There are several locale zones (two in our example in the picture - as many 'banks' as we have) all that lies in one place - in '1 bank', which in '2 bank'. There are more processes than 'banks' in the cache and the size of the 'bank' is large - one locale can be in one cache. Modern caches are just that.

Write cache

Increases speed and is able to increase more than the reading cache. It's easy to break. 1 If we write to memory and don't read from it - we lose one cache hit if we don't write what we save to memory and then go there - if we duplicate the read and write operation - it's hard hardware. You can read in parallel but you cannot write in parallel. It is inefficient in case there is no cache to write - the question is: is it possible to cache something if it is written? The answer is no. Write back: fundamentally use the cache for recording and only then synchronize its status in memory 'Hot Cache': when the contents of the cache do not match what is contained in memory.